As the famous proverb goes, “A rolling stone gathers no moss.” It is important to keep moving forward, be it in life or in your email marketing campaigns. Sending your subscribers the same type of email over and over, just because it was well received and earned great open rates in the past, is no longer acceptable.

It is important to adapt your emails to ongoing trends. But here is the catch: how do you find out what's clicking with most of your subscribers? The answer: by testing your options, or what email marketers prefer to call…

“A/B testing your email campaign”

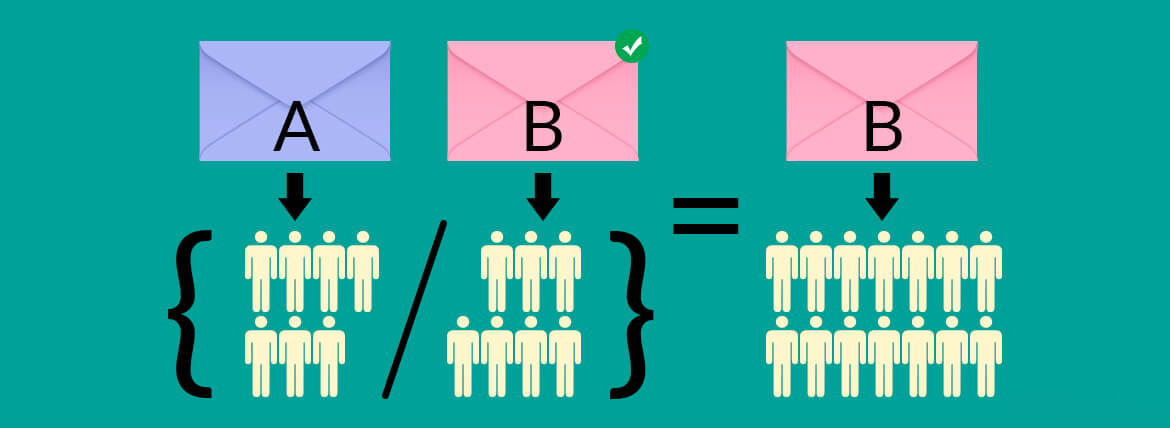

Email A/B testing means pitting one version of an email campaign against another version of the same campaign that differs in only one element. One version is sent to one set of subscribers and the other version to a second set. The winner is decided based on the metrics collected.

The effectiveness of A/B testing lies in the fact that changing a single element, while not a drastic change to the email, can significantly change the campaign's response rate.

For accurate results, it is essential that you change only one element at a time. If you want to test changes to several elements simultaneously, multivariate testing, which compares combinations of the variations, is the better approach.
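To see why multivariate tests need larger lists, note that every combination of variations becomes its own version of the email, and each version needs its own group of subscribers. The minimal Python sketch below enumerates those combinations; the two elements and their variations are illustrative only (borrowed from examples later in this post), and the variable names are hypothetical.

```python
from itertools import product

# Illustrative variations for two elements (hypothetical test setup)
subject_lines = ["Special offer! Buy Now", "We have a special offer! Just for you"]
cta_labels = ["Try Now", "Get Started Now"]

# A multivariate test sends every combination of the variations,
# so the number of versions is the product of the variation counts
variants = list(product(subject_lines, cta_labels))

for i, (subject, cta) in enumerate(variants, start=1):
    print(f"Version {i}: subject={subject!r}, cta={cta!r}")
```

Adding a third element with two variations would double the number of versions again, and each version's group would shrink accordingly.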

What elements can be A/B tested?

An email is made up of many small components, and each one of them can be tested. Marketers generally test the following components:

- Call to action

- Subject line

- Layout of the email

- Customer reviews or testimonials

- Personalization opportunities

- Body text

- Image placement

- Time and day of the send

- Rich media email vs. plain text (yeah, even this matters)

Preparing to conduct A/B Testing

Before you conduct A/B testing, it is important to chart out your strategy, deciding:

- which component will be tested

- how large your test groups need to be (your test segments must be large enough to reach statistical significance)

- how much time to allow between sending the emails and analyzing the results

- which metrics will decide the winning campaign
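How large is “large enough”? One common way to estimate it is the standard sample-size formula for comparing two proportions (such as open rates) with a two-sided z-test. The Python sketch below is an approximation under stated assumptions: a 5% significance level, 80% power, and baseline and target rates that you supply yourself. The function name `sample_size_per_group` is hypothetical and not part of any ESP's API.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_baseline: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate number of subscribers needed in EACH test group to detect
    the difference between two rates with a two-sided two-proportion z-test
    (normal approximation; a sketch, not an exact power analysis)."""
    if p_baseline == p_variant:
        raise ValueError("the two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # value for the desired power
    p_bar = (p_baseline + p_variant) / 2           # average of the two rates
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p_baseline * (1 - p_baseline)
                                      + p_variant * (1 - p_variant))) ** 2
    return math.ceil(numerator / (p_baseline - p_variant) ** 2)


# Hypothetical inputs: 20% baseline open rate, hoping to detect a rise to 23%
print(sample_size_per_group(0.20, 0.23))
```

The smaller the difference you want to detect, the larger each group must be, which is why a small list may not support a meaningful A/B split.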

Once you have charted out your strategy, it's time to put it into action. Thankfully, most email service providers (ESPs) have A/B testing built right into their email editors.

Let’s check out how it works in MailChimp:

You just need to create a new campaign and choose A/B test. (P.S.: with this option, you can test only the subject line, from name, email content, and send time.)

On the A/B campaign page, choose which element you will test, what percentage of your list will form the test group, and which metric will decide the winner after the test period. The test emails are then split equally across the selected percentage of your list, and the winning email, based on the selected metric, is sent to the rest of the list.
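MailChimp handles this split for you. If you ever need to reproduce it yourself, for example when running the manual tests described below, the logic can be sketched in Python as follows. `split_for_ab_test` is a hypothetical helper; it assumes your subscribers are available as a plain list and shuffles them so that each group is a random sample.

```python
import random


def split_for_ab_test(subscribers, test_fraction=0.2, seed=None):
    """Randomly split a list into group A, group B and the remainder.

    test_fraction is the share of the whole list used for the test; it is
    divided equally between the two variants. The remainder later receives
    the winning email. (Hypothetical helper, not an ESP API.)
    """
    if not 0 < test_fraction <= 1:
        raise ValueError("test_fraction must be in (0, 1]")
    shuffled = list(subscribers)           # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # random assignment avoids biased groups
    half = int(len(shuffled) * test_fraction) // 2
    group_a = shuffled[:half]
    group_b = shuffled[half:2 * half]
    remainder = shuffled[2 * half:]        # any odd leftover goes here too
    return group_a, group_b, remainder


emails = [f"user{i}@example.com" for i in range(1000)]
group_a, group_b, rest = split_for_ab_test(emails, test_fraction=0.2, seed=42)
print(len(group_a), len(group_b), len(rest))
```

Passing a fixed `seed` makes the split reproducible, which helps if you need to re-run the analysis later.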

Depending on the element you choose to A/B test, you enter the alternatives either in the setup section or, in the case of email copy, in the email template.

For the rest of the components, such as CTA copy, email layout, image placement, and the plain-text version, you need to create two separate campaigns with the variations, segment your email list according to your target group size, and analyze the results manually.
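When you analyze manually, one quick way to check whether the difference between the two versions is real or just noise is a two-proportion z-test on the metric you chose (here, opens per email delivered). This Python sketch assumes a two-sided test at the 5% level; the counts are made up for illustration, and `two_proportion_z_test` is a hypothetical helper name.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(successes_a, sent_a, successes_b, sent_b):
    """Two-sided z-test for the difference between two rates
    (e.g. opens / emails delivered). Returns (z, p_value)."""
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b
    pooled = (successes_a + successes_b) / (sent_a + sent_b)  # rate under "no difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        raise ValueError("rates of exactly 0 or 1 cannot be tested this way")
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results from two manually created campaigns
z, p = two_proportion_z_test(successes_a=200, sent_a=1000,
                             successes_b=250, sent_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:
    print(f"Variant {'B' if z > 0 else 'A'} performed significantly better")
else:
    print("No significant difference; treat it as a tie or keep testing")
```

A p-value below 0.05 only says the gap is unlikely to be chance; it does not tell you why one version won, which is where your hypothesis comes in.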

Congratulations! You have successfully learned how to A/B test your email campaigns. But this is not the end of the road: put the following tips into practice to make your A/B testing more effective.


Success stories based on strategic A/B testing

- uSell tested its subject lines to find a winner: uSell pays cash for used electronic devices. As part of its drip campaign, it sends an email encouraging customers to send in their device free of charge. The goal was to increase the number of customers sending in their devices, i.e., customers taking action after reading the email. To maximize open rates, uSell A/B tested the subject line.

Variant A: Your old device is saying, only 1 week left

Variant B: We still haven’t received your {{product_name}}

Variant A scored a 24.5% improvement in open rate, and the number of users actively sending their devices increased by 7.5%.

Variant B scored only a 14.3% improvement in open rate, yet the number of users actively sending their devices increased by 8.6%.

This larger increase may be because Variant B's subject line conveys the crux of the email, so recipients are already thinking about sending their devices to uSell. (Source)

- Personalized call-to-action copy: When Unbounce replaced ‘My’ with ‘Your’ in its CTA copy, it observed a 24.91% drop in conversions.

- Actionable CTA copy converts better: The tone conveyed by the CTA button also significantly affects the conversion rate. A study by Marketing Experiments found a difference in click-throughs between ‘Try Now’ and ‘Get Started Now’.
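Percentage “improvements” like the ones in the uSell example can mean either a relative lift or a change in percentage points, and the figures above do not say which. The short Python sketch below shows the difference; the rates are hypothetical, not uSell's actual data, and `relative_lift` is an illustrative helper name.

```python
def relative_lift(baseline_rate: float, variant_rate: float) -> float:
    """Relative improvement of the variant over the baseline, in percent."""
    return (variant_rate - baseline_rate) / baseline_rate * 100


# Hypothetical open rates, NOT uSell's data
baseline_open_rate = 0.20
variant_open_rate = 0.249

print(f"{relative_lift(baseline_open_rate, variant_open_rate):.1f}% relative lift")
print(f"{(variant_open_rate - baseline_open_rate) * 100:.1f} percentage points")
```

The same result reads as a 24.5% improvement in relative terms but only about 4.9 percentage points in absolute terms, so state which one you mean when you report a winner.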

Tips to improve the effectiveness of your A/B tests

Understand your motive: Before you move ahead with A/B testing your email, understand what you want to achieve, e.g., a higher open rate or a higher click rate.

K-I-S-S (keep your A/B test simple): Focus on the variables that are likely to yield the most meaningful results.

Ensure consistency in test campaigns: Make sure the two variations are not poles apart.

For example, for subject lines, “Special offer! Buy Now” and “We have a special offer! Just for you” make a good pair of variations.

Learn from your past: Evaluate past campaigns to analyze what worked best and what was a ‘dud’.

Determine your end goals: Decide whether you need to improve your open rates or your click rates, and conduct your test accordingly. If you have more than one end goal:

- Frame your test according to your goal: Set up a separate test for each element and send the winning email from each test.

- Split the list wisely: Before you test your email campaign, check whether the list is large enough for an A/B split or needs to be broken into several smaller test segments.

Avoid these mistakes:

Not having a valid hypothesis: A hypothesis is the basis of an A/B test. You propose a reason behind your current email performance and, based on that hypothesis, take steps to improve it.

Comparing results from an outdated campaign: Email marketing metrics fluctuate over time. Comparing your results from the post-holiday slump in January with those from the pre-holiday rush won't give you meaningful conclusions.

Changing the metrics midway: This may sound like an obvious mistake, but changing the metrics or your test sample midway through the test skews the results. If you change the parameters, you'll need to start the test again.

Not segmenting your list before testing: A/B tests need to be conducted among like-minded subscribers. If you don't segment your email list before sending your A/B test, differences in engagement between subscriber groups can skew your results.

Wrapping Up

A/B testing can be taxing for the uninitiated, but veteran email marketers swear by it as a way to serve their subscribers nothing but the best and, in turn, reap a great ROI.

Inspired to run an A/B test in your next email marketing campaign? Be sure to share your experience in the comments below.